I’ll start this one with a quote from the Macmillan Dictionary of Psychology (1989), under the entry for “Consciousness”: “The having of perceptions, thoughts, and feelings: awareness… Nothing worth reading has been written about it.”

So I apologise for adding to the mess of writing on the topic, but while researching related ideas for the One Hundred Billion Sparks album project, which is about the brain more widely, some interesting ideas crystallised that I wanted to get down in words.

Chalmers’s “hard problem” of consciousness has been one of my favourite ideas since a young age, when I first started trying to reconcile the scientific, mechanistic viewpoint of our explanations of the external world with the subjective feeling of the inner world. It’s continued to be a central theme throughout my music too, where I’m often trying to link the feelings associated with musical structures to video projects concerning things happening externally. I’m not going to get too weighed down in the “hard” vs “easier” problems of consciousness; there are plenty of good books and videos on that. All I’ll say is that the easier problems are concerned with how the brain works mechanistically, while the hard problem is that of how any mechanistic process of inanimate matter can create a feeling of experience.

There is much disagreement on how to solve the hard problem, or whether it even exists. I’m not proposing another grand solution here, but instead a fragmentation of the problem into some possibly easier sub-problems, which I think are interesting questions in themselves. You will have heard much of this before if you’re a reader of Damasio, Dennett, Hofstadter, Tononi, etc., but disclaimers aside, hopefully I can put forward a smidgen of something new.

The Map is Not the Territory

One key idea is Alfred Korzybski’s dictum “the map is not the territory”, which here says that our brain states and perceptions of the external world are not the same thing as the external world itself. We only have a type of internal simulation of reality, limited by our particular senses and position in the world. When I say “I saw a tree”, you are perfectly happy with that statement. We share a mental model of what a tree is. But of course (bear with me here), if you were a tree (who could speak), you would say “hold on a second, that mental model you have about a brown, barky, slowly growing thing with leaves and branches and the rest, no matter how detailed it is, is not the same thing as I am”. I’m not proposing trees can speak here; the point is that our mental models of anything are never really the same as the real things, but we just accept them in order to facilitate communication.

But now bring in the hard problem of consciousness. Consciousness is the one thing in the world which we actually experience first-hand. So suddenly this problem with mental models (or “maps”, as in the Korzybski quote) is laid bare, and we say “hold on a second, that neural-correlates-of-consciousness science stuff you just described isn’t what I am, in here”.

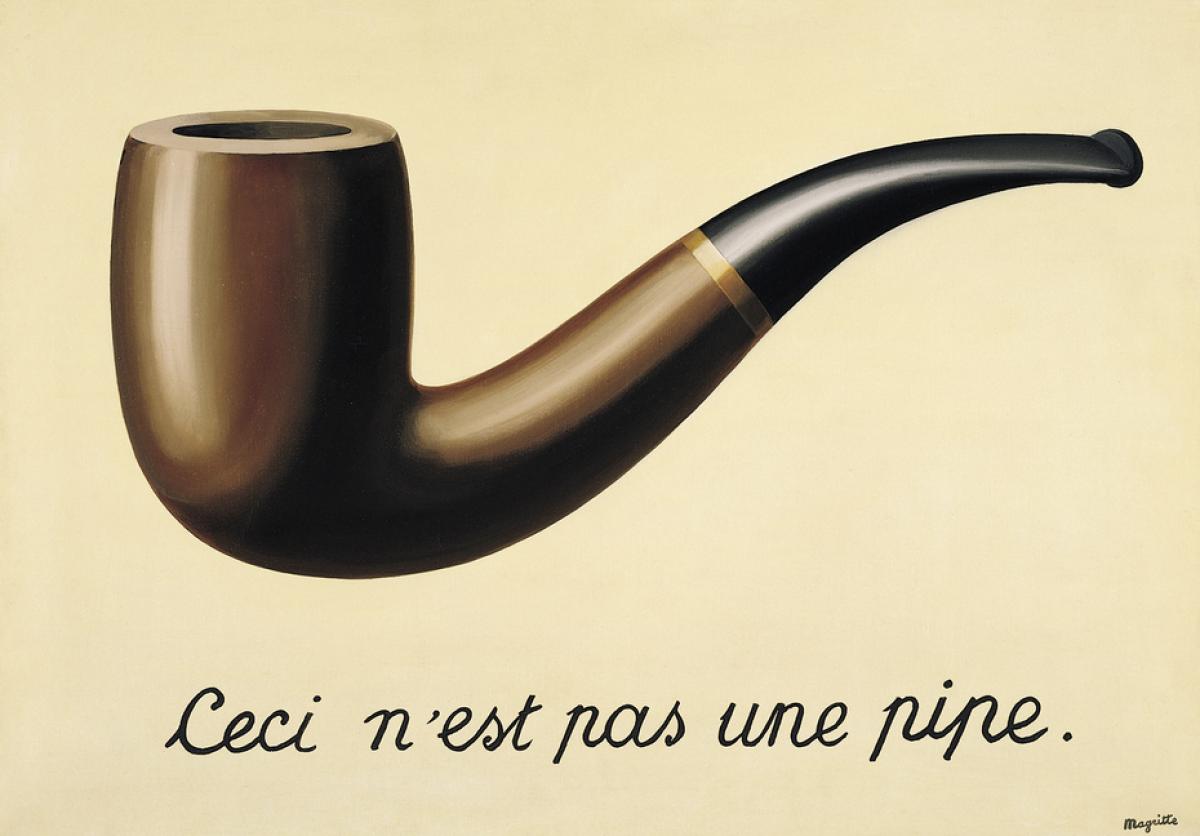

No written description, model, theory or idea about the mind can ever satisfactorily capture the internal experience of being you, because they are all just models; models are all we have at our disposal for communication, and the map is not the territory. But rather than this being a dead end for the hard problem, what I think is interesting is that the same reasoning applies to everything else too: concepts of trees and their representative symbols are not trees themselves (Magritte’s painting ‘The Treachery of Images’ is a famous example of this sort of reasoning too).

It’s just with consciousness that the insufficiency of our system of thought becomes unavoidable. Put another way, the hard problem has to exist because there is a general inadequacy in our system of mental modelling and symbolism, applying to everything physical, which we happily ignore day to day in order to make communication possible, but which is made obvious when we try to create theories of mental experience. So the hard problem should feel hard, and its explanations cannot, by definition, explain it on a totally satisfying intuitive level.

This issue also relates to the commonly held belief in dualism, whereby mind and matter are fundamentally different things. The implication of the argument above is that mind and matter have to feel fundamentally different, when the difference is just one of perspective: conscious mind is the experience of particular dynamical arrangements of matter, as in the brain. Why some arrangements of matter should feel like anything, while others do not, is the topic of the next section.

Damasio’s Observer

I’ve read many books on consciousness, often finishing them with a feeling of unfulfillment, but not so with Antonio Damasio’s latest book, “The Strange Order of Things”. He takes a refreshing, feelings- and emotions-led approach to the topic, not only in terms of the science but also in his writing style. I spend so much of my time working with feelings and thinking about how to convey them musically, or analysing music in those sorts of terms, and it’s always seemed central to what consciousness is, but it is often ignored in academic works on the mind.

Damasio puts forward a surprisingly simple and appealing explanation for how our bundle of one hundred billion firing neurones, integrated with our body, can create our experience: he breaks experience down into our mental model of a body phantom, an observer, and valenced feelings localised on this body phantom. It’s a phantom because the body we experience is, of course, imagined, as everything we experience is imagined/created by our brains, with input through the senses to help out. And there are amusing experiments with VR, and more basic techniques involving mirrors or fake appendages, which show how easily this phantom can be displaced: participants can be made to place their conscious experience inside another person, or to assign the experience of their arm to a rubber fake, as in the “rubber hand illusion”. Damasio’s message is that we build a model of our physical self; models of valenced sensations (on a scale of positive or negative association), localised to different areas of the body phantom; and a model of an observer, us, sitting somewhere behind the position of the eyes in the body phantom.

This may all sound too simplistic in this short form, and I recommend reading Damasio’s book for a fuller explanation, but the message I took from it was a nice argument that to create subjectivity, we simply need to simulate the subject. For me, however, this all hinged on one key assumption which was not discussed: that to simulate something makes it exist.

For this argument we treat the brain as simulating things in a sense, even though of course we know it doesn’t operate like our normal computers, being a non-digital, hugely parallel system, for example. But I think the idea that it models and simulates things in some analogous way is a fair assumption, especially given our work with neural network simulations and their capacity to compute in a way comparable to traditional digital computers. Part of my PhD research involved exactly this: I made a model of gene regulatory networks, which are similar in functionality to neural networks in a general sense, and I trained my networks to perform basic logical operations on pairs of inputs. I found that extremely simple networks with only 3 or 4 nodes could perform many of the basic logical operations that are the fundamental building blocks of all computers, such as the NAND gate, which in computers is made physical by transistors.
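To make the logic-gate point a little more concrete, here is a minimal Python sketch. It is not my gene regulatory network model; it assumes the simplest possible stand-in, a weighted threshold node with hand-picked, hypothetical weights, and shows one such node computing NAND. Because NAND is functionally complete, the other gates can then be wired from NAND nodes alone; XOR, for instance, takes four, loosely echoing the 3 or 4 node networks mentioned above. All function names here are illustrative.

```python
# A minimal sketch, NOT the PhD gene regulatory network model:
# a single weighted threshold node, the simplest kind of artificial "neuron".
# All names and weights below are hypothetical, chosen for illustration.

def threshold_node(inputs, weights, bias):
    """Fire (return 1) if the weighted sum of the inputs plus the bias is positive."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if total > 0 else 0


def nand(a, b):
    # Hand-picked weights: the node fires unless both inputs are on.
    return threshold_node([a, b], weights=[-2, -2], bias=3)


# NAND is functionally complete: every other gate can be built from it.
def not_(a):
    return nand(a, a)


def and_(a, b):
    return not_(nand(a, b))


def or_(a, b):
    return nand(not_(a), not_(b))


def xor(a, b):
    # The classic four-NAND construction.
    c = nand(a, b)
    return nand(nand(a, c), nand(b, c))


if __name__ == "__main__":
    print("a b | NAND AND OR XOR")
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "|", nand(a, b), and_(a, b), or_(a, b), xor(a, b))
```

Running it prints the truth table for all four input pairs. XOR needs more than one node because no single threshold node can compute it: its true and false cases cannot be separated by one weighted sum.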

So, if our brains simulate our body, observer and localised valenced sensations, and if we accept that simulating something makes that something “exist”, then we’re off! Except that’s a pretty prickly sentence right there, and maybe you’re screaming out that you don’t accept that simulating something makes it exist in a proper, meaningful way, so let’s look at that in some more detail.

It from Bit

A starting issue here is what we already looked at in the map is not the territory section. If we simulate a tree, no matter how well we do it, it’s never the same thing as a real tree. So in that sense, simulating the tree does not make a tree exist. But what if the thing we’re simulating has no real-world physical counterpart on which it is based? Take the game Grand Theft Auto V (GTAV), for example. I think we’d all agree that GTAV exists, but the inert physical disc you receive it on is not what GTAV is. The act of simulation creates it, and there is no more real version than that. GTAV is an emergent outcome of the interaction of the atoms of the disc with those of your computer.

It was suggested to me that maybe GTAV only exists when someone is playing it. But if we set up some sort of computer-versus-computer mode and let it run in the absence of any humans, I’d say it would still exist, with the caveat that I refuse to go down any wave-function-collapse or tree-falling-in-the-woods madness as part of more general arguments on realism and existence outside of our presence (see the notes on meaning and realism below). So there are some points to argue here, but for now I feel relatively comfortable with the standpoint that simulating something with no other real-world counterpart makes that thing exist, inasmuch as it can. And even if we deny this is true, it still seems to me to be an interesting and more tractable fragment upon which the hard problem is dependent.

The Map IS (not) the Territory

For this class of simulated emergent phenomena which have no physical counterparts, we have turned the map and territory argument on its head: now the map is the territory, in the sense that the most meaningful definition of such a thing is its simulated form.

Going back to our mental experience and the hard problem: given that it has no counterpart in the external physical world, and our mind does not exist out there in the world but only inside our actively simulating brain, perhaps the act of brain simulation of the kind proposed by Damasio is all that is needed to create it. We should remember, though, that these sorts of explanations can never feel intuitively full and correct, because I’m talking to you via a system of symbols and models, which are not the same thing as the actual simulation of you that you are experiencing right now.

So we have both map/territory interpretations in operation simultaneously: the map is the territory, in that your experience is the simulation of it, and the map is not the territory, in that my explanation of this system is not the same as the system itself. This sort of situation reminds me of an interesting quote I read recently, attributed to Niels Bohr, along the lines of “an ordinary truth is one whose opposite is false, whereas a profound truth is a statement whose opposite is also a profound truth”.

A Note on Meaning

A lot of this seems to hinge on the idea of meaning. In the case of whether a simulated game exists, we can argue that without any human ever there to qualify its existence, it’s just more bouncing particles, no different from the next bunch of randomly bouncing particles which have no possible emergent structure. Or, in the case of the hard problem and Damasio’s model, we have the issue of where the meaning sneaks in that says these bouncing particles are simulating a body phantom, a subject, and valence.

But the “meanings” we are talking about here are no more than the logical structures themselves, the logical framework of information processing which makes a game or simulates a conscious observer. Whether or not we give the label of “meaning” to those systems, they are carrying out the information processing all the same.

This all reminds me of Douglas Hofstadter’s writing on this topic, and in particular the arguments over Gödel’s incompleteness theorem, whereby Gödel created a whole new interpretation, and layer of meaning, on top of what was supposed to be Whitehead and Russell’s bedrock of unquestionable truth and structure in mathematics, their Principia Mathematica. My understanding is that once Gödel had demonstrated the logical consistency of his interpretation of their system, it was impossible to deny the truth, or existence, of his proof, which destroyed the aims of the project as a whole. I don’t want to get weighed down with the details of Gödel’s incompleteness here; the point I’m interested in is the idea that meanings are contained in existing systems, whether or not we’re aware of them.

A Note on Realism

A related way to pull down my argument about the existence of simulations would be to deny realism, the idea that the world out there exists whether or not we are checking. This seems a common-sense assumption, but many respectable physicists will deny realism and opt for a system whereby the world we are aware of is created by our measurements. If we deny realism here, then perhaps a simulation can only exist when we are there to qualify it, in which case my argument about how Damasio’s model relates to the hard problem does not stand. As I mentioned earlier, though, I’m fairly comfortable with this angle, and in fact this link to realism only strengthens my stance personally, because two ideas I find counter-intuitive can be linked and thrown out together: anti-realism, as explained, and anthropocentrism, a human-centred model requiring our presence to yield nature. History has shown that when we put ourselves in too central a position within a natural system, we tend to be wrong and find out we’re not as important as we thought. Both of these topics are fairly thorny, though, and maybe I’ll be swayed by a good counter-argument in future. But again, for me they are interesting ideas to which the hard problem, and many other questions, relate, whatever side we fall on.

So, what?

So, the hard problem has to feel hard, even if it’s not. And perhaps all that is needed is exactly the sort of information processing we are already aware of, with no special ingredients, if we accept the limits of our intuition and the power of information processing and emergence to create. For me this does carry a sort of weak panpsychism, in that all matter has the seed of a capacity for self-awareness, even though it’s only structured that way in brains, so far. I expect many of you will have questions and criticisms about all this, so I look forward to a late-night chat about it sometime soon.